Website as list

Weird design, data spread around, cumbersome display, annoying pagination with few items and lots of pages, frequency of access, ease of filtering, ... are some of the lot of reasons why nowadays I like to view web content not as they are intended by the website they come from, but as a simple list.

That's why I have ended up building a collection of quick scripts and snippets that gets me what I want. Here are the strategies used.

Retrieving the data

How the website gets its content

The first step of the process of building your list is to understand how the data gets from the server to your browser to be rendered. Nowadays, there are usually two ways this happens. Either as the initial web page request, or as an asynchronous call later.

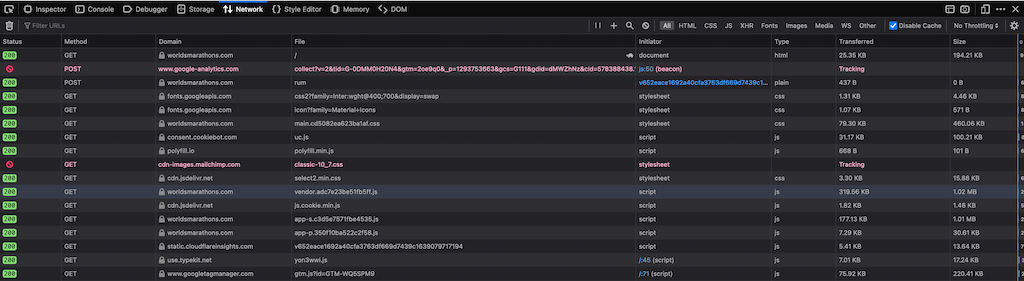

To determine how it is done, head to the network tab in your web browser and refresh the page.

Then head to the html and xhr tabs looking for the one that sends you the data you need. The developer tools allow you to preview the content response of each request. Often, you can expect it to be an XHR call when you see a small delay between when the page gets displayed on your screen and when the data gets rendered.

Reproducing the call

Once you know which call gets you the data you want, the first step is to be able to reproduce it manually, outside of the web browsing environment. For that, I use postman (https://www.postman.com) that helps test requests.

I won't get into much details here on how to reproduce the call as it is very dependent on the website. The main thing is that it is important to be able to reproduce the call outside of the web browser so that the request is as standalone as possible. The browser may set headers / cookies / ... automatically that you want to identify and reproduce later on.

The good thing with Postman is that once you get the call working, it is very easy to extract a working code from it. Click on the </> button on the sidebar to view a code you can reuse. I usually use "PHP - curl" as I am familiar with it, it works and is independent from any package.

The code looks more or less like that depending on what's needed to retrieve the page.

$curl = curl_init();

curl_setopt_array($curl, array(

CURLOPT_URL => '<THE URL>',

CURLOPT_RETURNTRANSFER => true,

CURLOPT_ENCODING => '',

CURLOPT_MAXREDIRS => 10,

CURLOPT_TIMEOUT => 0,

CURLOPT_FOLLOWLOCATION => true,

CURLOPT_HTTP_VERSION => CURL_HTTP_VERSION_1_1,

CURLOPT_CUSTOMREQUEST => 'GET',

));

$response = curl_exec($curl);

curl_close($curl);

echo $response;Setting up a caching strategy

Once I get that code, the first thing I do is set up some caching, I want to be respectful of the website as much as possible.

Here is what I use, it is not perfect, but it works and ensure I am not asking for too much from the server, especially when testing the building of the list.

<?php

// Extracting the URL from the curl array

$url = "<THE_URL>";

// Building a file to store the response content from the URL name, here also using the date if I am expecting daily changes to the data.

$file = "cache/" . md5(date("Ymd") . $url). ".json";

// Then it is simply a matter of getting the content from the file

// instead of calling the server if the file exists.

if (file_exists($file)) {

$response = file_get_contents($file);

} else {

$response = // Postman code to call server using $url

// We create the file with the response we got from the server

file_put_contents($file, $response);

}Getting what you need

From a json response

That is the ideal scenario, the server gives you something you can directly interact with in a convenient way.

$content = json_decode($response);And you are ready to interact with the data as you would with a normal php object.

From html

That is usually more annoying. The main reason being that the html contains a lot of of useless elements about the rendering of the page. The response was made to be processed by a web browser, not by your code. You'll have to look into making things work by inspecting and interacting with the DOM to build your own objects containing the data.

You may get issues with namespace. I managed to work around those in the past with :

function applyNamespace($expression)

{

return join('/ns:', explode("/", $expression));

}Wrapping each xpath query (that can be obtained through the developer tools) into a call to applyNamespace

$doc = new DOMDocument();

$doc->loadHTML($response);

$xpath = new \DOMXpath($dom);

$ns = $dom->documentElement->namespaceURI;

$xpath->registerNamespace("ns", $ns);

$element = $xpath->query($this->applyNamespace("<SOME_XPATH_QUERY>")); libxml_use_internal_errors(true); to the top of your script to avoid random warnings when loading the HTML. Retrieving multiple pages

One of the main reasons I build the list pages for myself is to not have to have to go through multiple pages manually to find the right one. So, in most cases, I need to also automate that pagination process. It is easy enough.

First step is to identify how to pagination is done on the website.

Then move your calls into a loop.

sleep(random_int(2, 12)) before curl_exec above won't slow you down much but would go a long way ensuring the server can stay available while also possible helping avoiding some detection mechanism that would block your access.For the loop, you'll need an ending condition. There are multiple ways of handling it depending on what you want. Typically one of those :

- End after a specific number of records or pages have been returned

- End when the data returned doesn't match what you want anymore

- End when a 404 Not found error code has been received

- End when there is no more record to get

Most of the time I go with the last one as it is the most reliable one that gives all of the data. The first one is great if there are way too many records, and you can sort the data returned to what you need.

It usually gives something along the lines of :

$allRecords = [];

do {

$url = "<SOME_URL>?page=$page";

// Retrieve the data for $url as above (with the caching)

// Whatever is needed to get the records into $recordsForCall

$recordsForCall = [];

$allRecords = array_merge($allRecords, $recordsForCall);

} while($recordsForCall > 0);Viewing the list

At this stage, most of the work is done, all you need is a way to display that list. I use 2 different ways depending on what I want to do with it :

- Display as html in the browser, if only to see the listing or if there isn't that much content

- Export to CSV when I need to play with the data such as having some filtering or there are a lot of records / datapoints that make it not convenient to show in the browser.

As html

Goal being to just dump the data, It is usually quite raw like

<html>

<head></head>

<body>

<table>

<thead>

<tr>

<th>Column 1</th>

<th>Column 2</th>

<th>Column 3</th>

</tr>

</thead>

<tbody>

<?php

foreach ($rows as $row) {

sprintf(

"<tr><td>%s</td><td>%s</td><td>%s</td></tr>",

$row->column1,

$row->column2,

$row->column3

);

}

?>

</tbody>

</table>

</body>

</html>Then, put on an accessible web server, I just open the page in the browser t access the list.

As spreadsheet

The easiest is to directly build a file from PHP, in the simplest case where you can assume all record have the same data, for example :

$output = "some_file.csv";

$columns = array_keys((array)$rows[0] ?? [])

// Open stream

$o = fopen($output, "w");

// Add header line

fputcsv($o, $columns);

foreach ($rows as $row) {

// Add row

fputcsv($o, lineCsv((array)$row));

}

// Close stream

fclose($o);Where lineCsv is

function lineCsv($row, $columns)

{

$return = [];

foreach ($columns as $column) {

$return[$column] = $row[$column] ?? "";

}

return $return;

}I then run the script using php -f script.php that generate a file that can be opened in any spreadsheet software afterwards.

Going further

Using this techniques, it can be easy for you to add your own filters to the code to match exactly what you want, build your own aggregator from multiple website. Those steps are intended to give you an idea on how to proceed. It won't be a one size fits all. If you need something reliable, maybe build something using tools like scrapingbee that would offer both reliability on retrieving the data, but also a common interface you can build on.